Generative AI in Practice: Hybrid Workflows for Commercial Design

Brian Hull leads the exploration of safe, scalable applications of generative AI in commercial content creation. His work develops new workflows that integrate tools such as Midjourney, Firefly, and Mercury Language Lab to enhance design, motion, and storytelling at speed and scale. Designed to increase operational efficiency, reduce production time, and improve creative ROI, this work reflects a hands-on approach to innovation rooted in pragmatism, brand safety, and real-world value.

Below are early-stage outputs, ethical considerations, and applied use cases showing how generative AI is being tested and translated into production-ready solutions that augment creative teams.


Making Gen AI Work for Brands

The Approach and Outcomes

Client Benefits

Accelerated concept development across multiple creative directions

Up to 35% faster asset creation timelines

Maintained quality through human oversight

Increased cost-efficiency through streamlined workflows

Key Tools & Platforms

Copy: Mercury Language Lab (proprietary), Google Gemini, NotebookLM

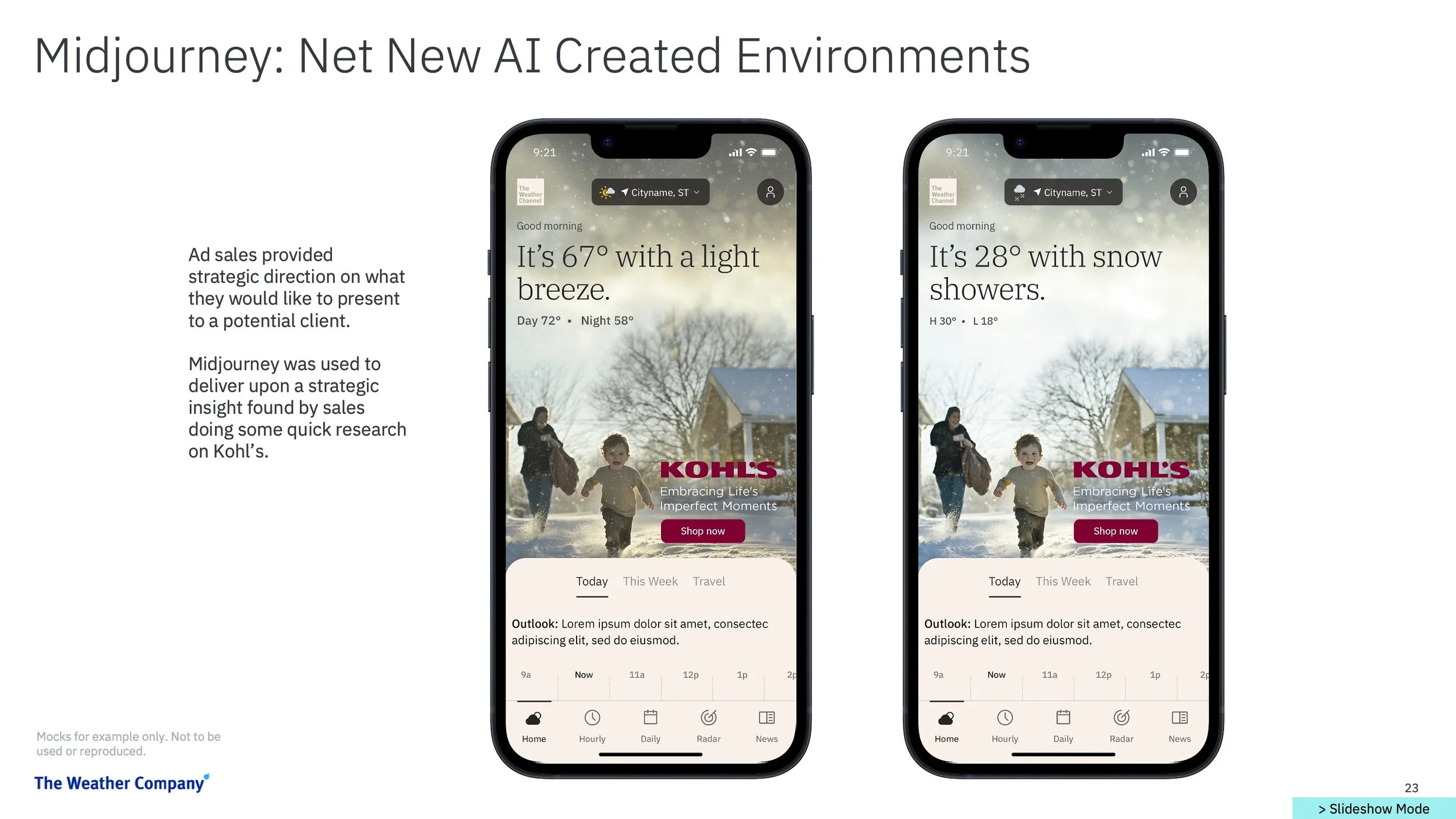

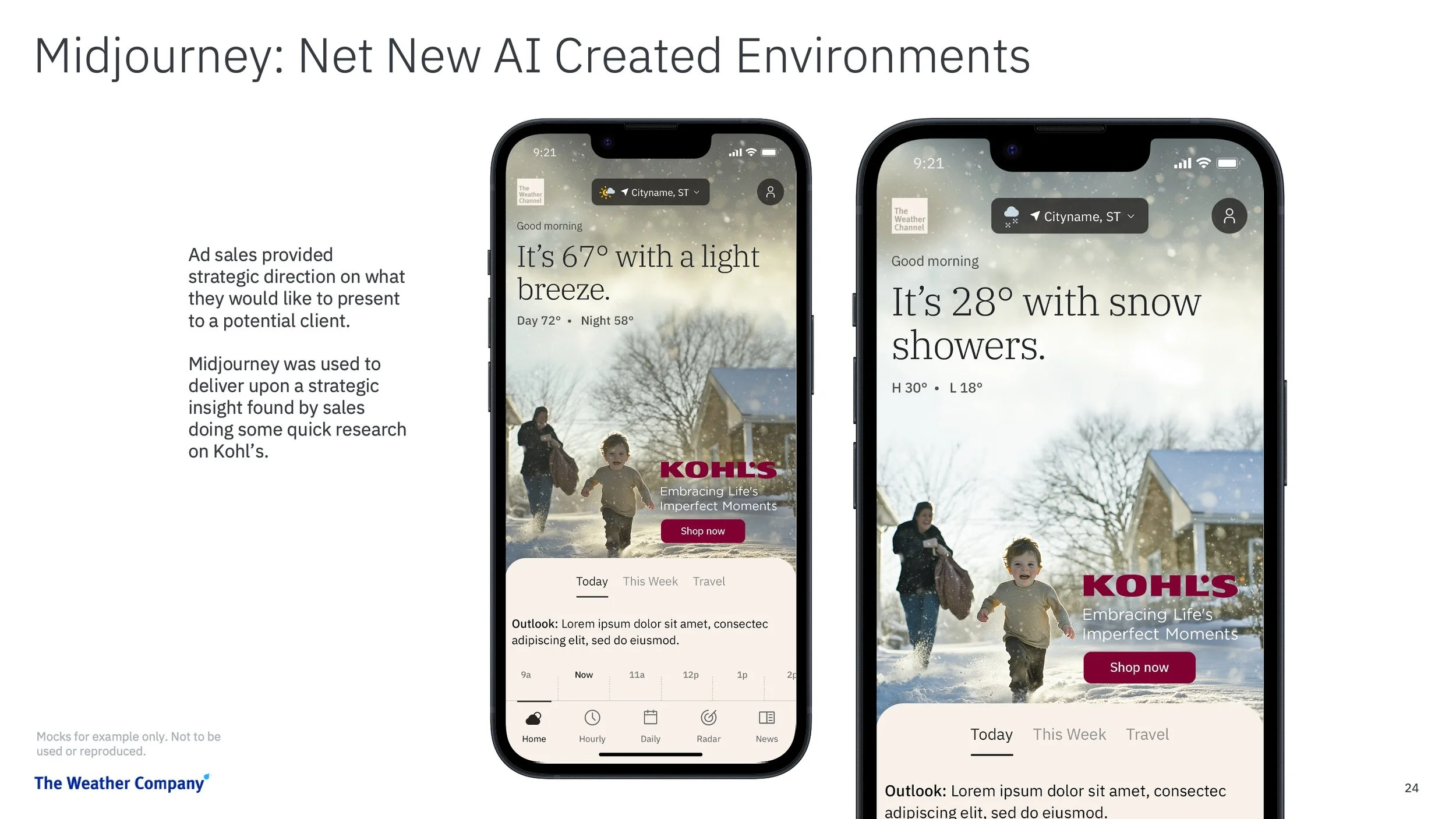

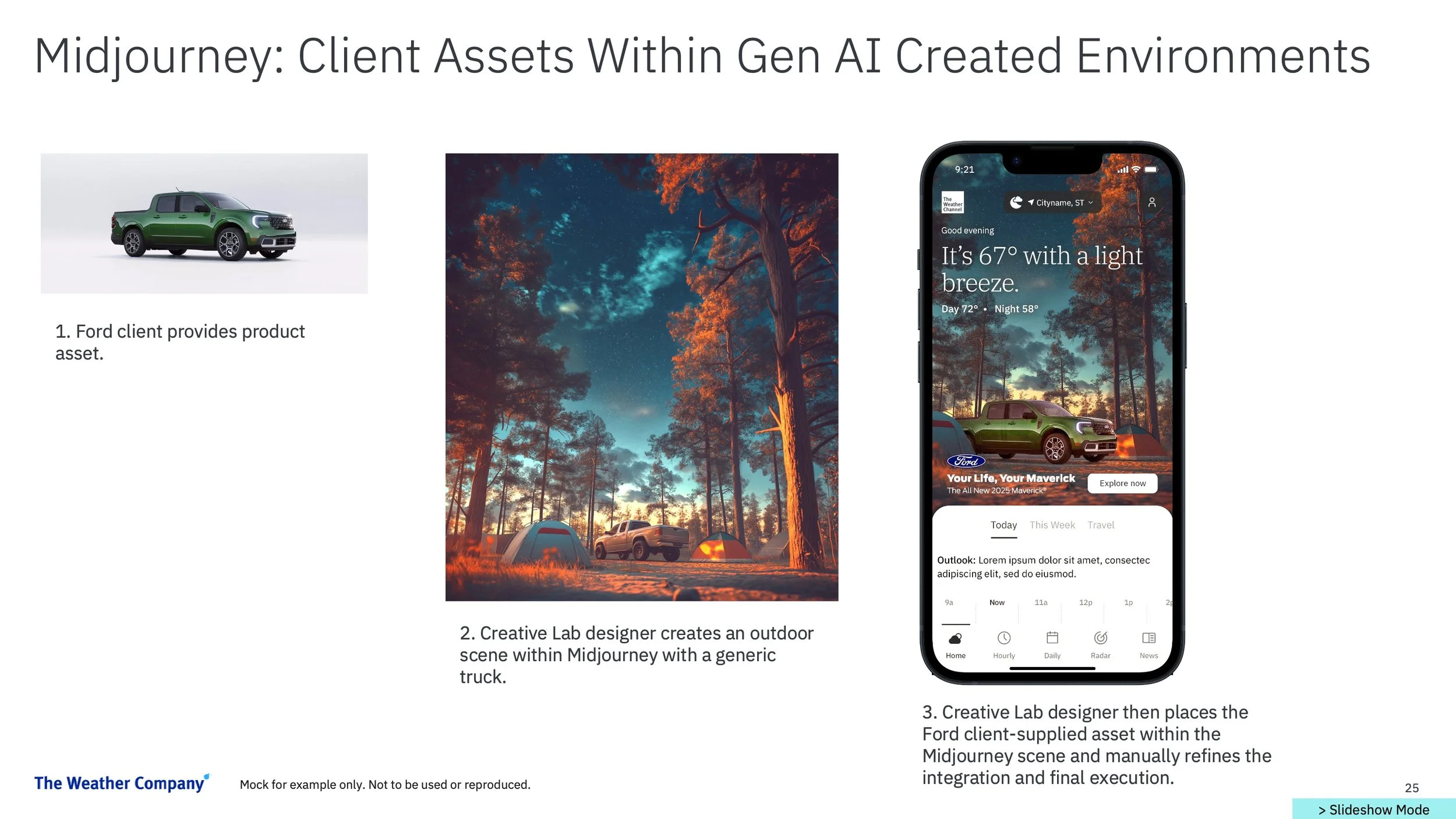

Visuals: Adobe Firefly, Midjourney (with safeguards)

Video: Runway ML, Luma AI (with safeguards)

Ongoing Innovation: Brian’s Creative Lab team actively tests and refines emerging Gen AI–powered content and ad-tech solutions

Human-Guided Workflows

AI tools are used to accelerate production, not replace creative expertise

All outputs undergo human refinement and QA for brand safety and consistency

Enables faster ideation without compromising quality

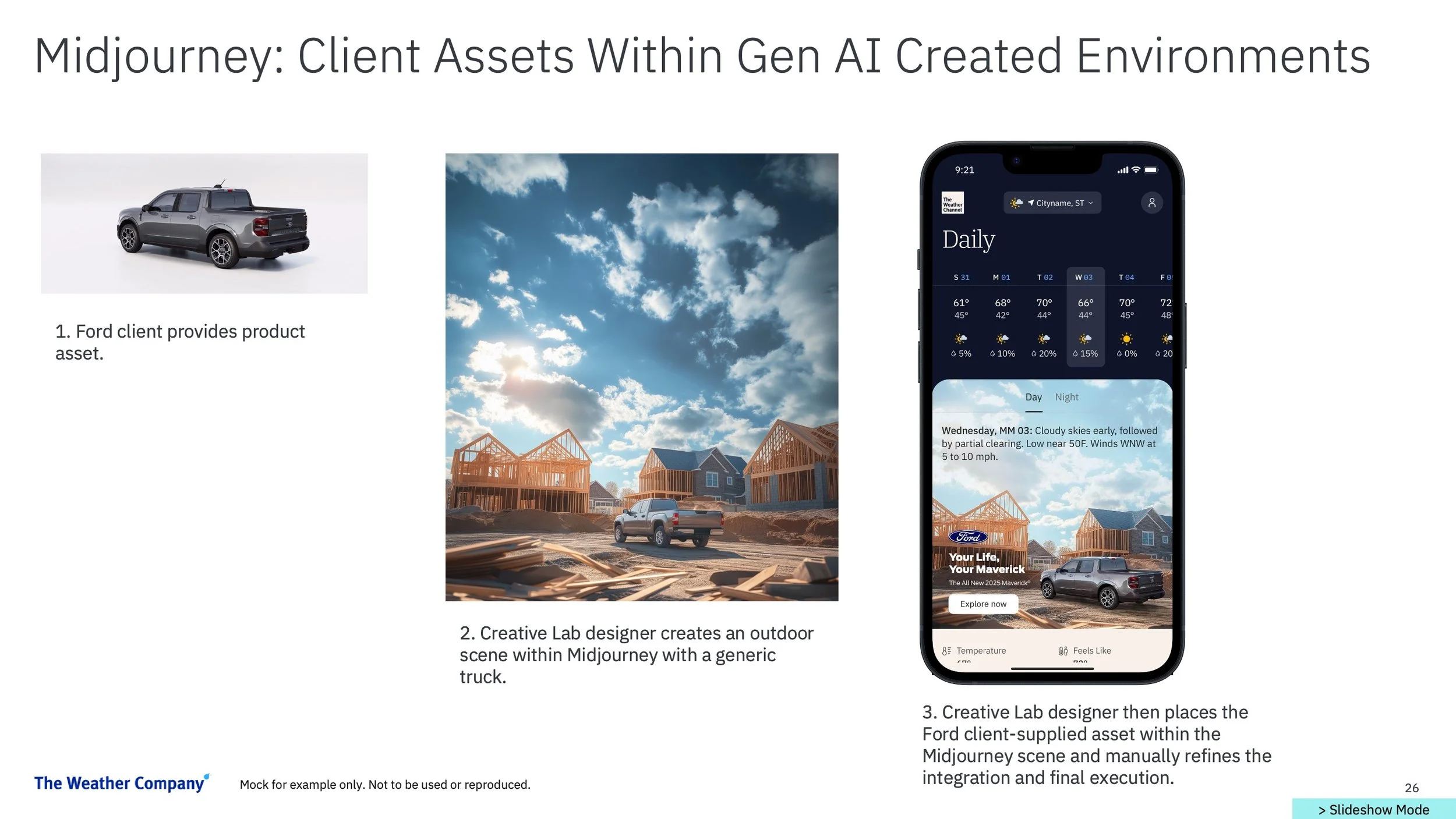

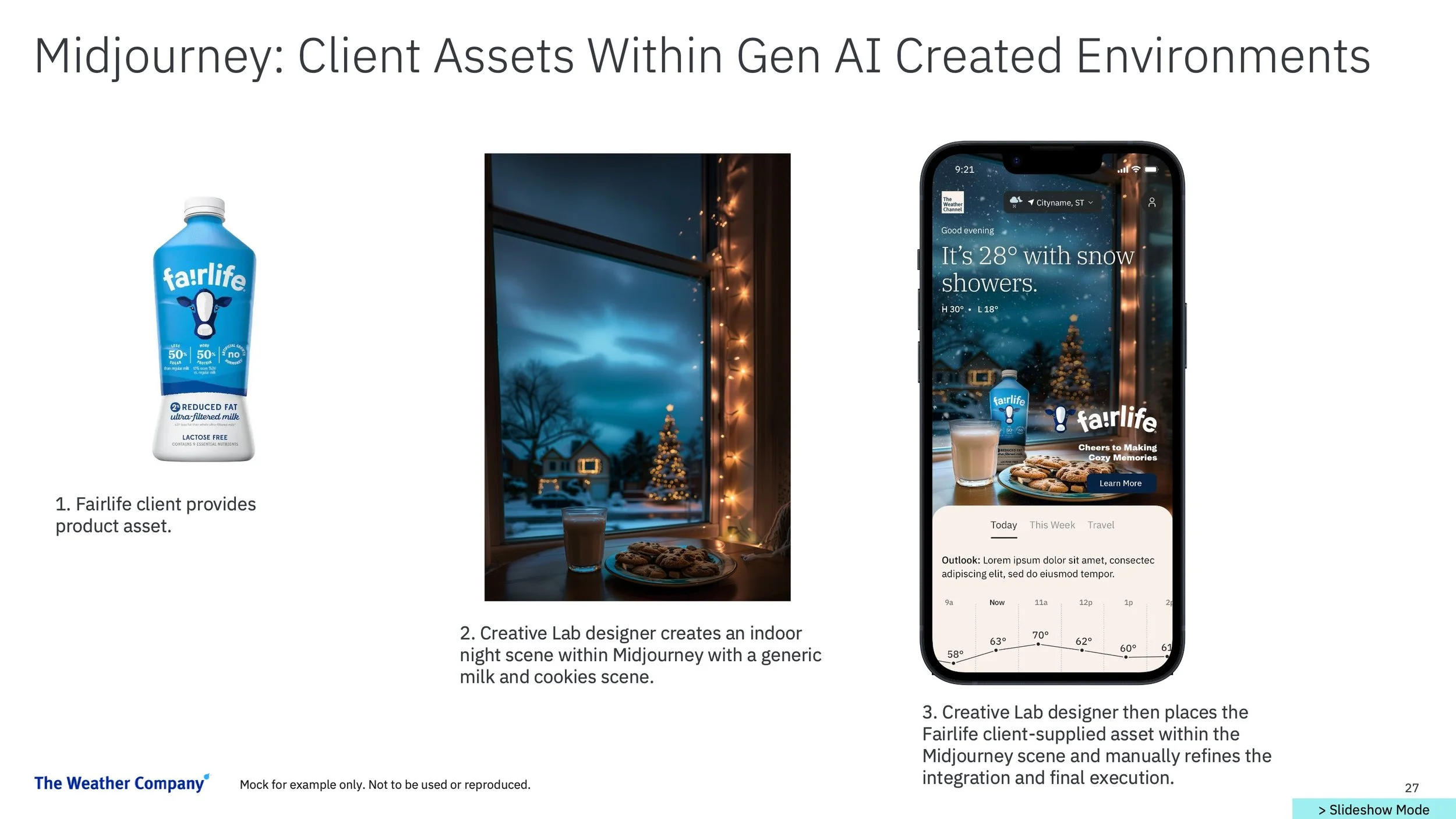

Client IP Protection

No client assets are uploaded to third-party platforms (e.g., Midjourney, Runway, Luma)

Assets are integrated manually using Adobe Creative Cloud tools

Preference is given to platforms with commercial-use protections (e.g., Adobe Firefly)

Grounded in the Foundations of Advertising

Gen AI workflows are shaped by established advertising tenets: story planning, audience insight, and creative control still drive results.

Legacy Methods: Manual Assembly & Product Integration

The short video below shows how complex branded scenes were previously assembled by hand, highlighting the time, precision, and effort required before Gen AI-enhanced workflows.

Pioneering Hybrid Human–Machine Workflows

We’re now leading the development of hybrid creative workflows that combine human expertise with Gen AI assistance, accelerating commercial production while maintaining brand integrity.

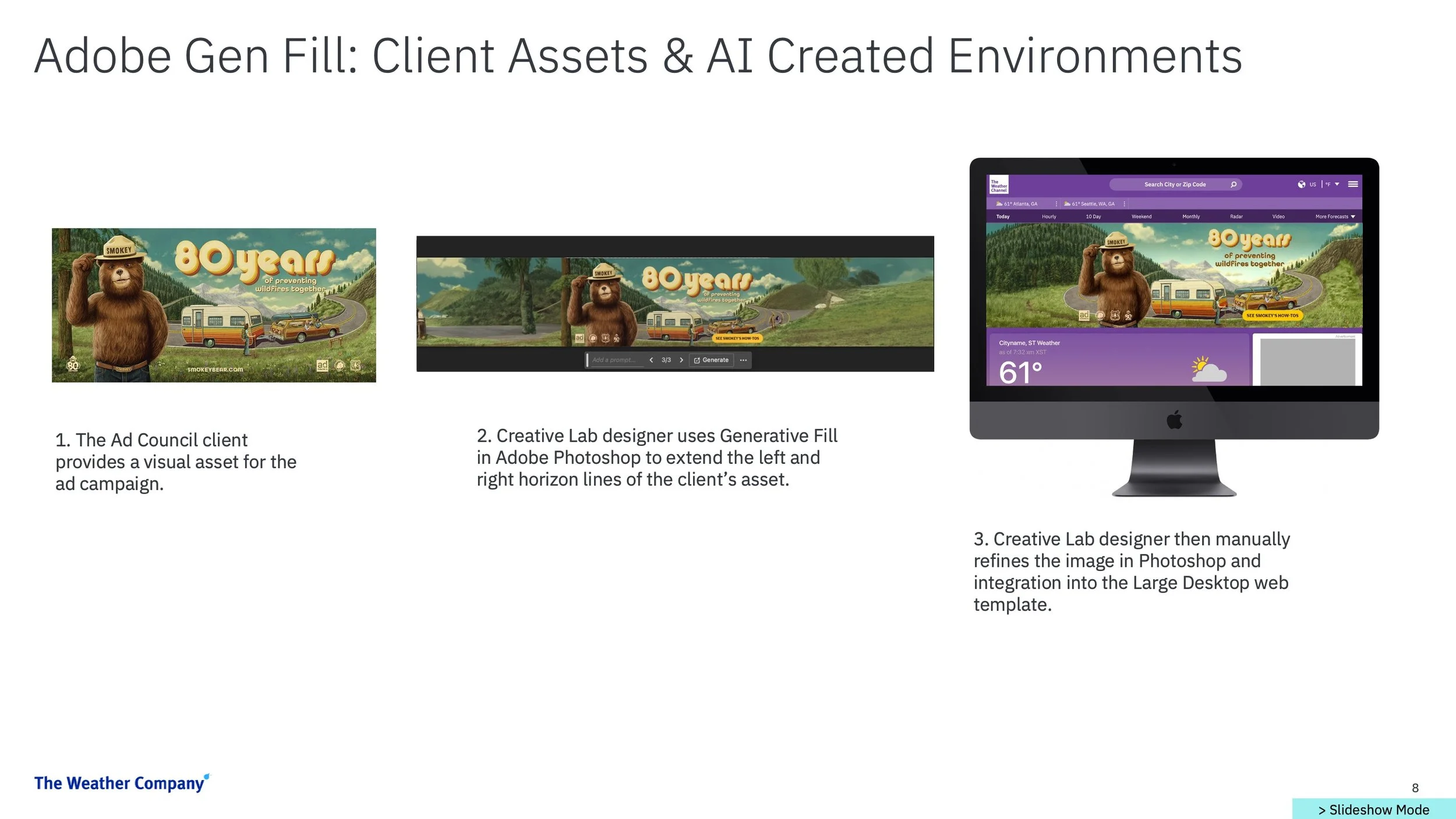

Extending Brand Assets with Visual Consistency

Watch the short video below to see how we expand on client-provided assets while staying faithful to the brand’s original visual vocabulary and design intent.

Accelerating Scene Creation for Dynamic Ad Delivery

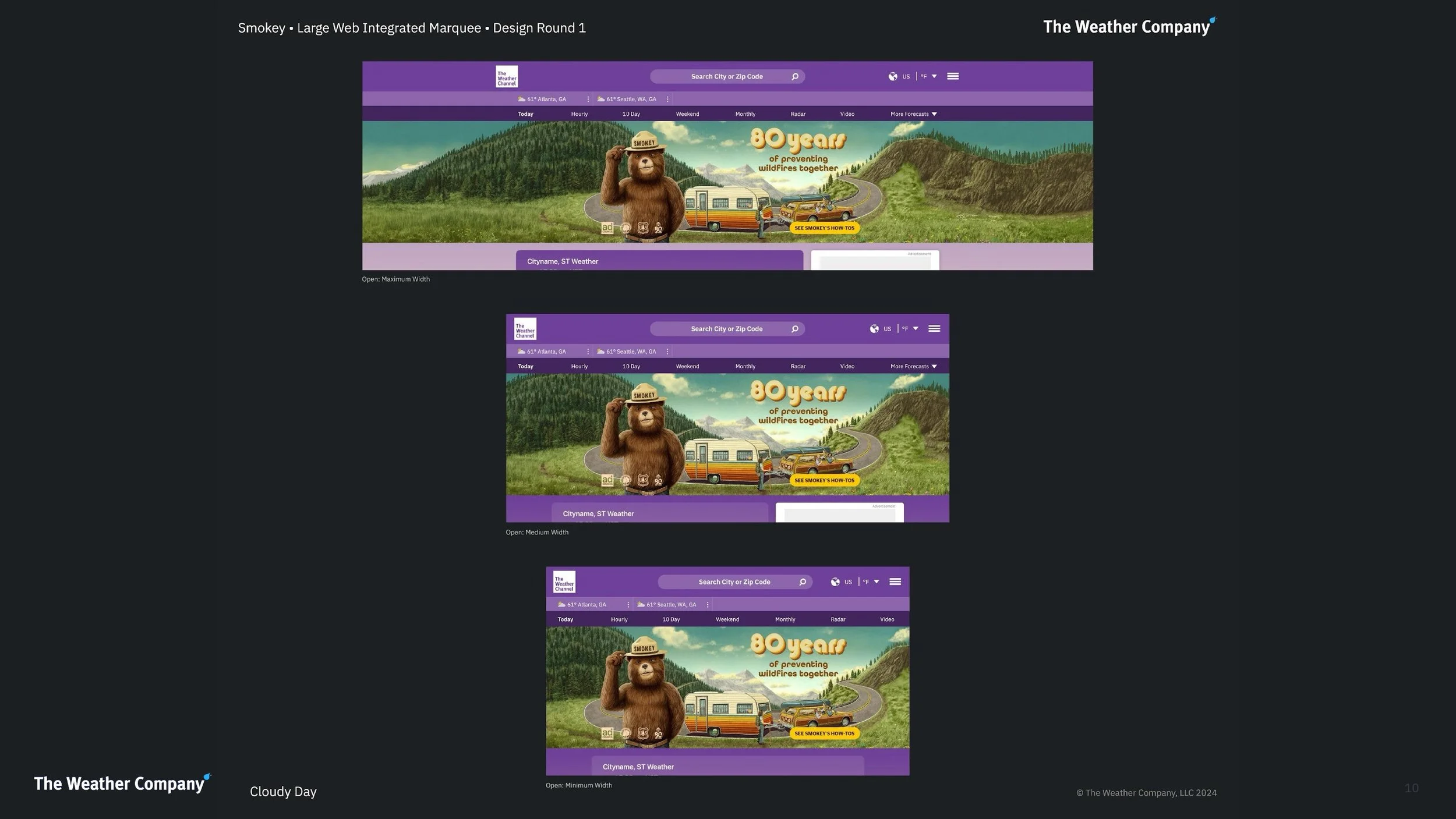

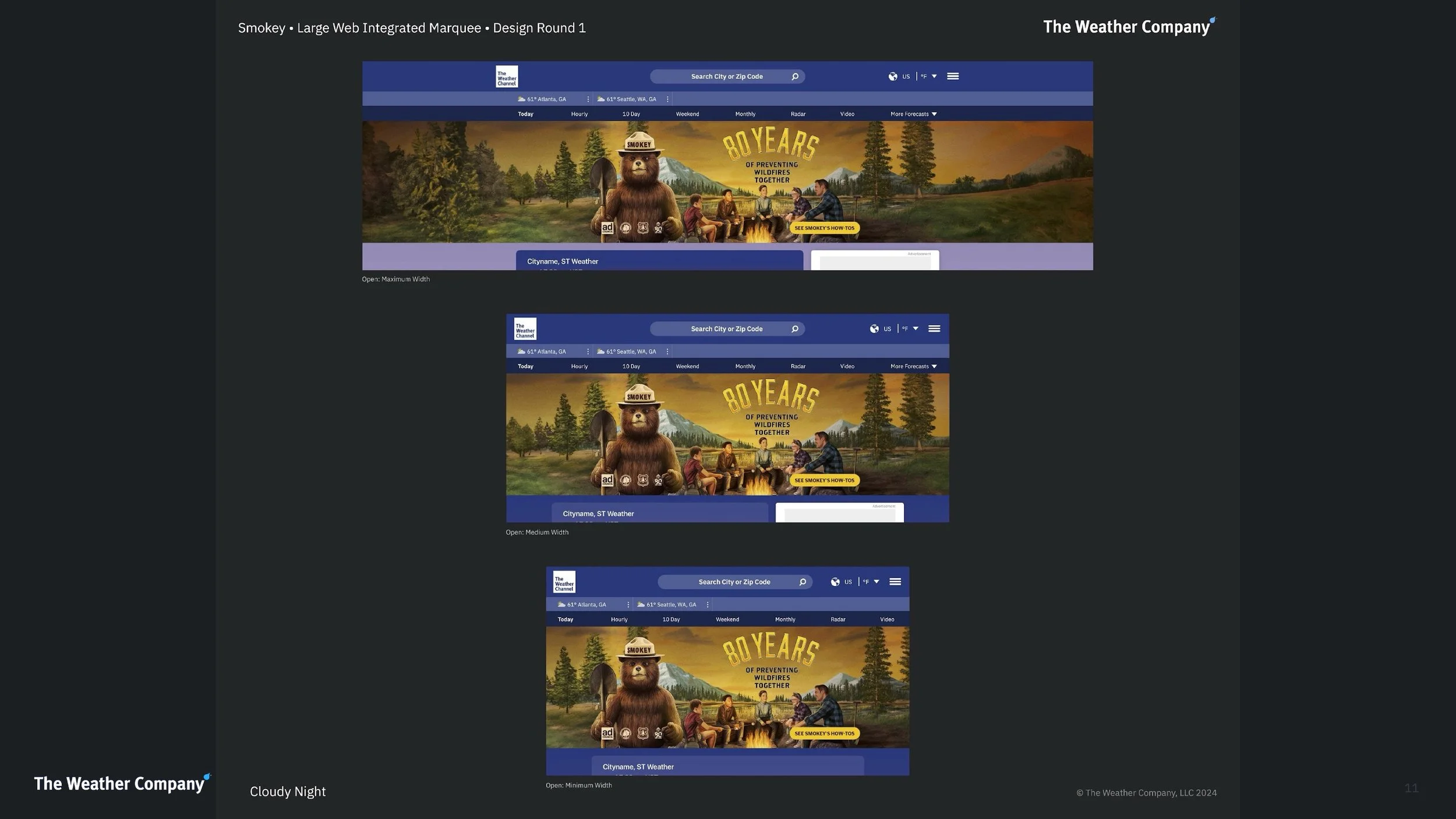

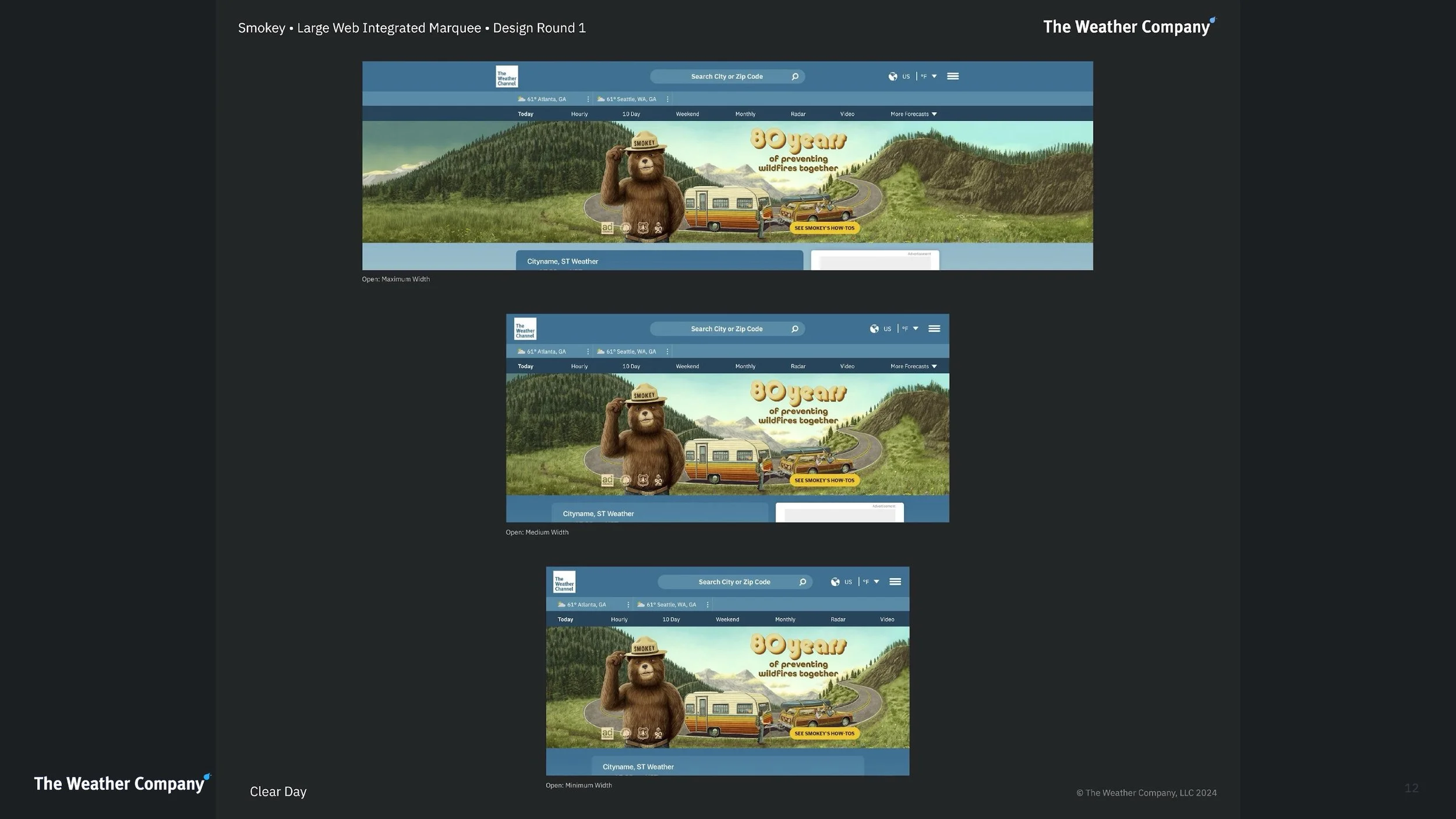

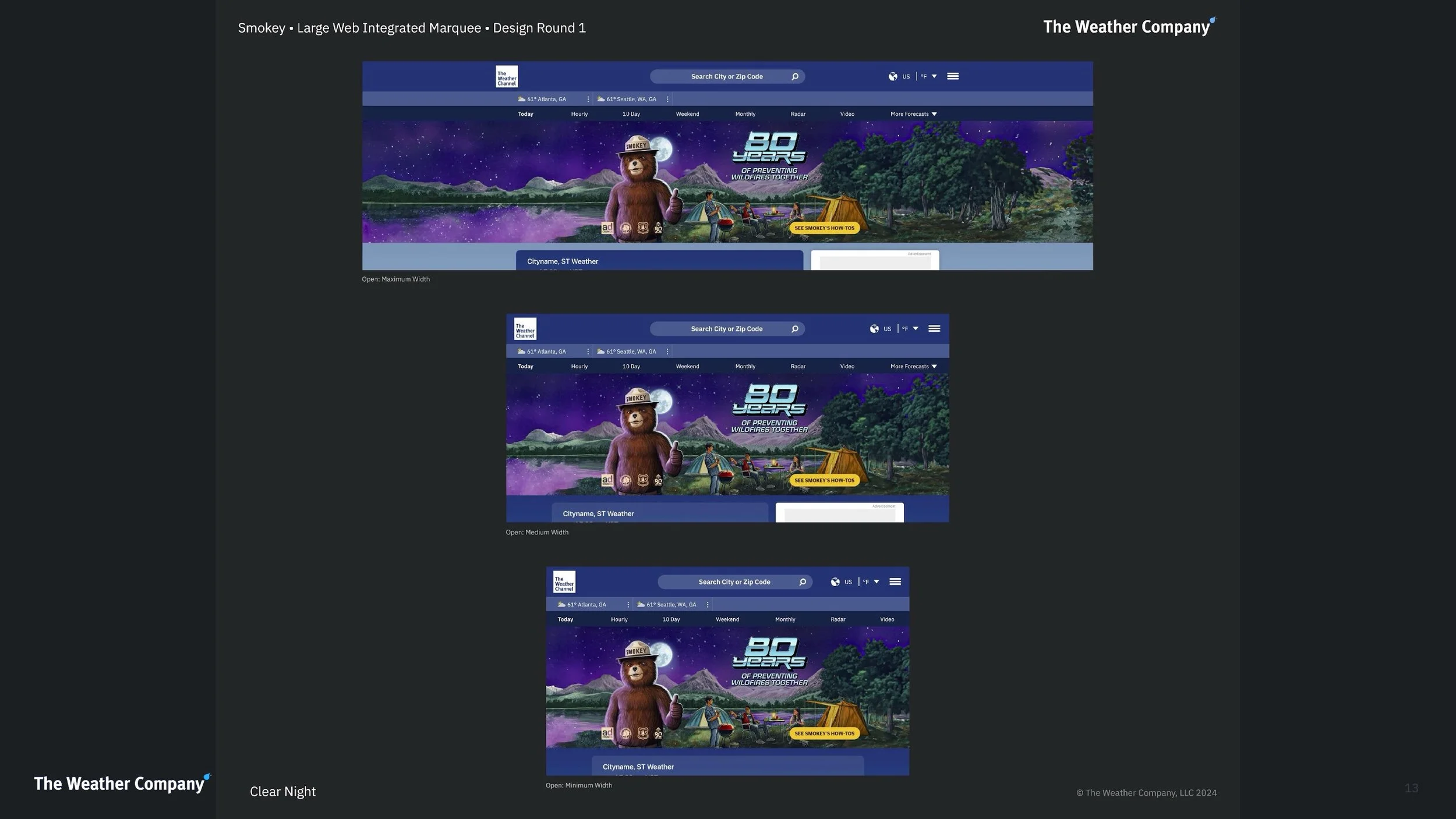

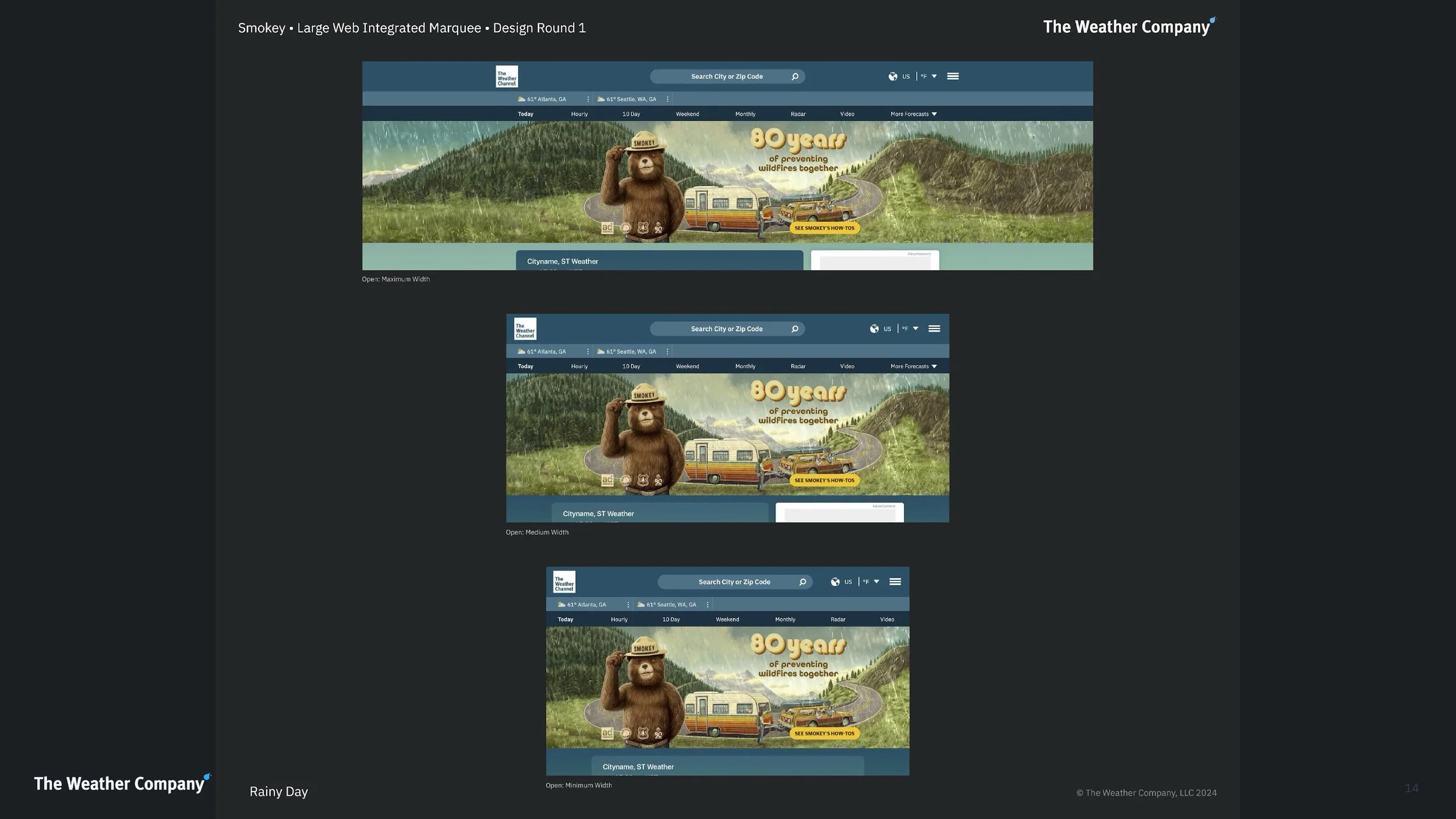

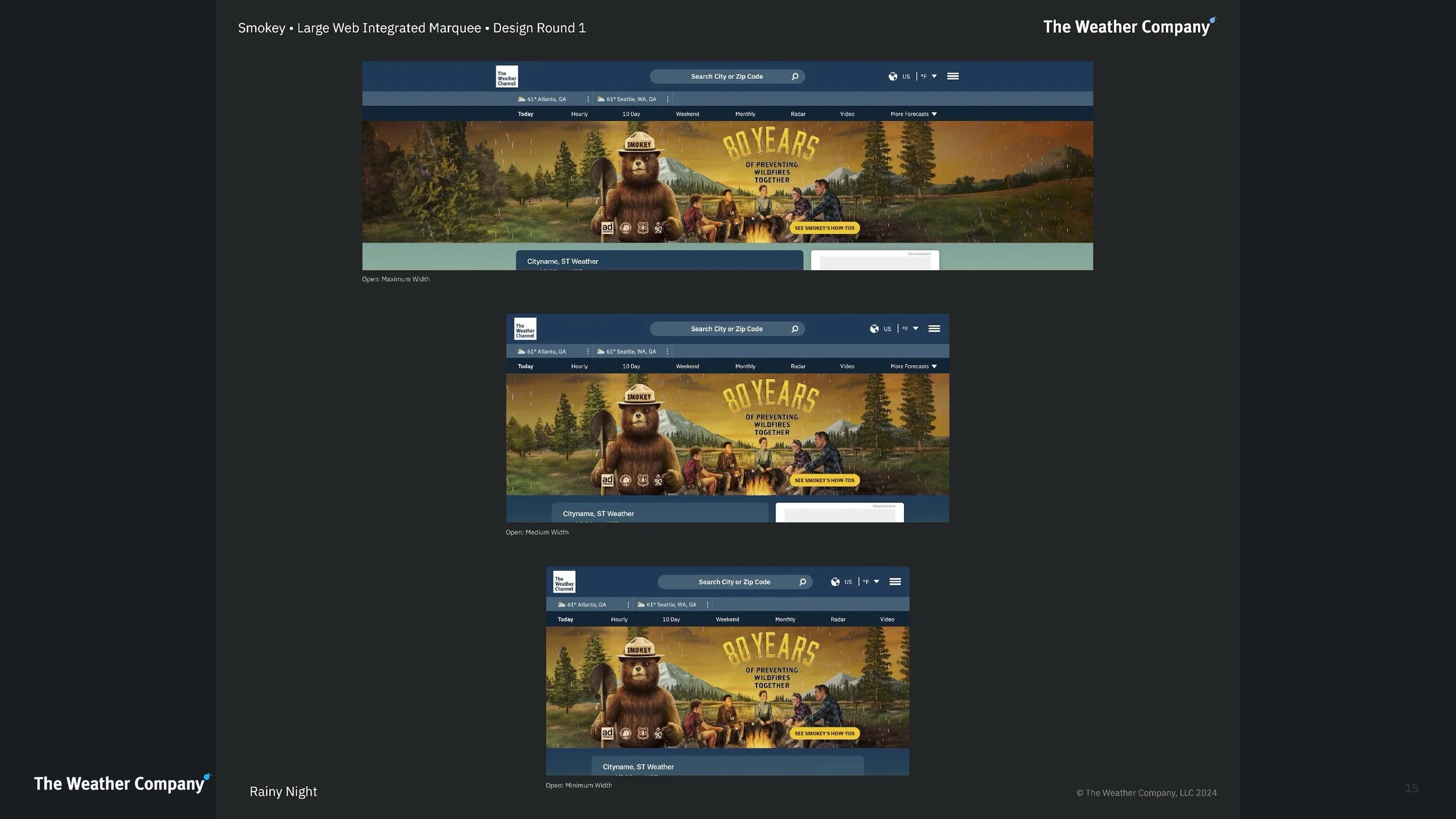

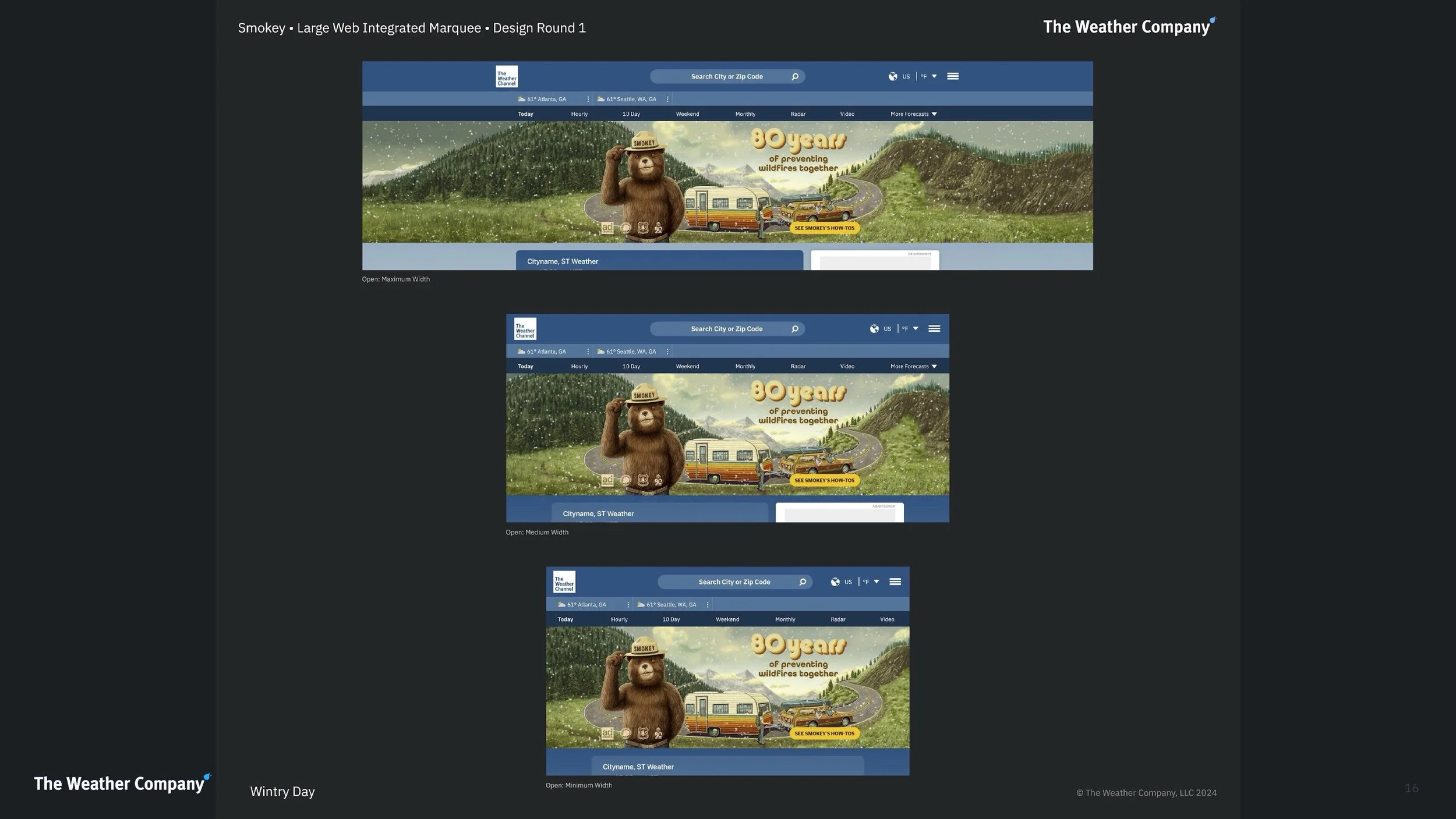

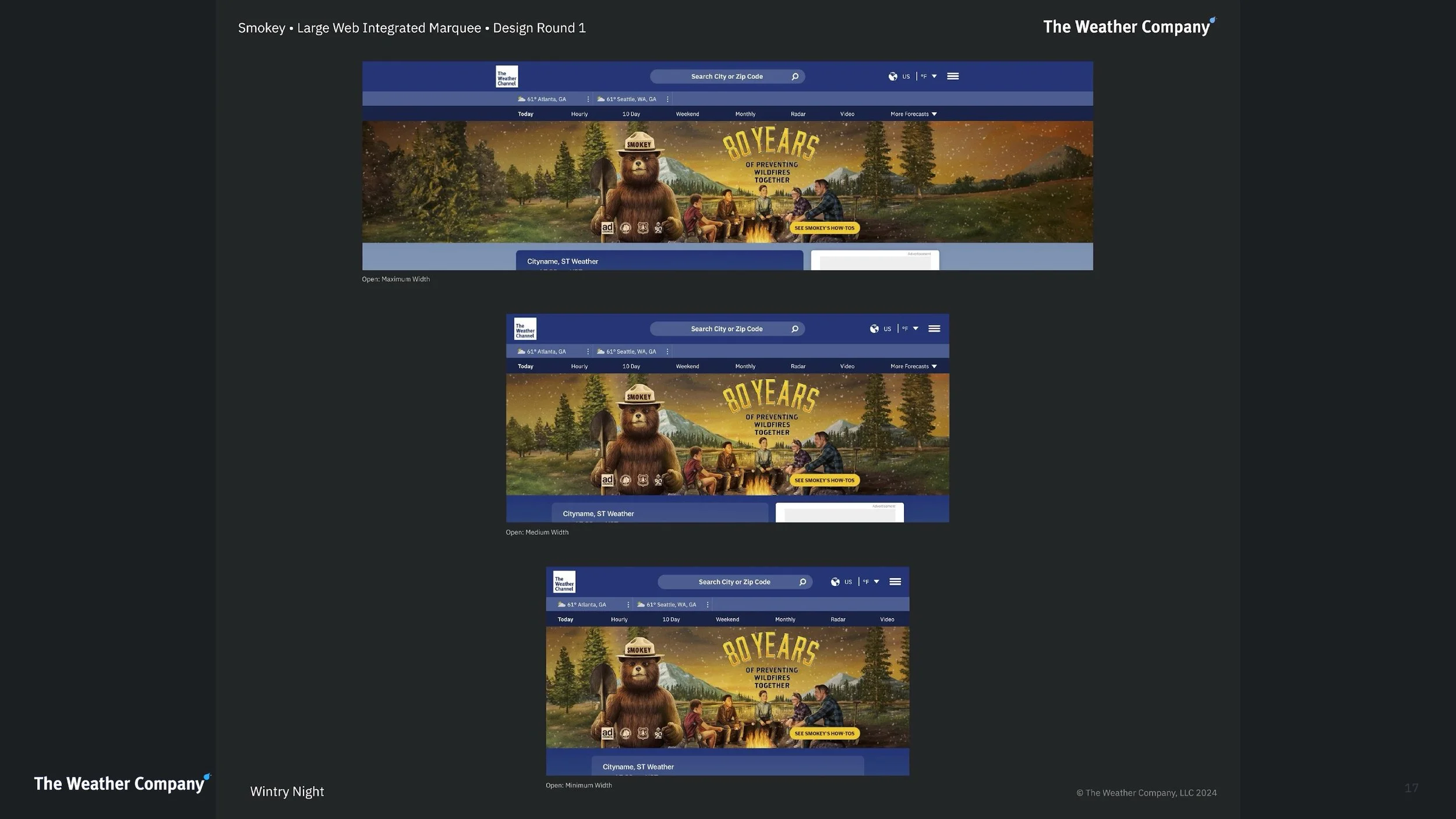

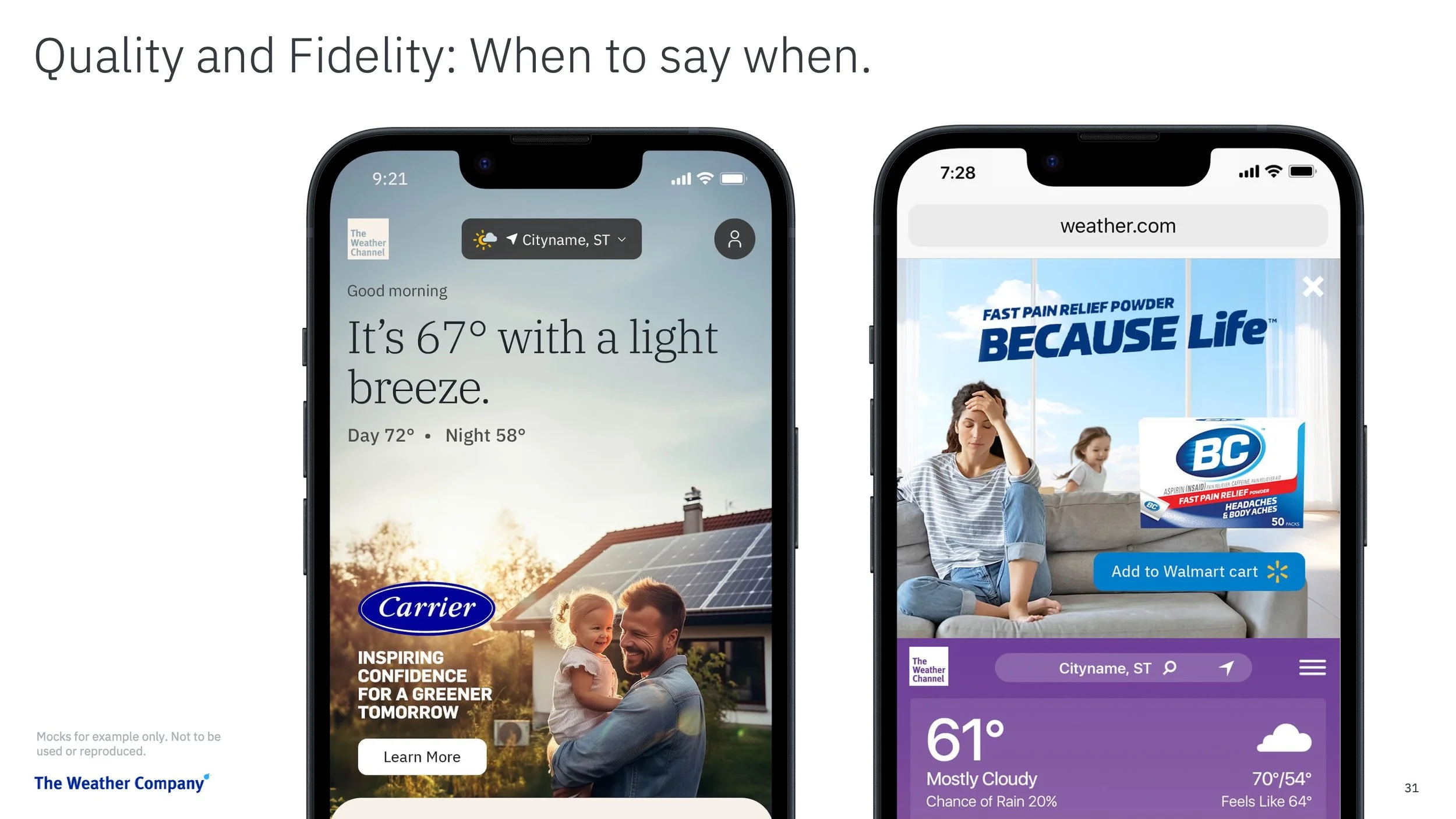

Using Adobe Generative Fill, the designer rapidly created the eight scenes below. Each was tailored to populate a dynamic creative optimization (DCO) matrix that serves large-format web ads matched to each user’s weather, location, and time of day.

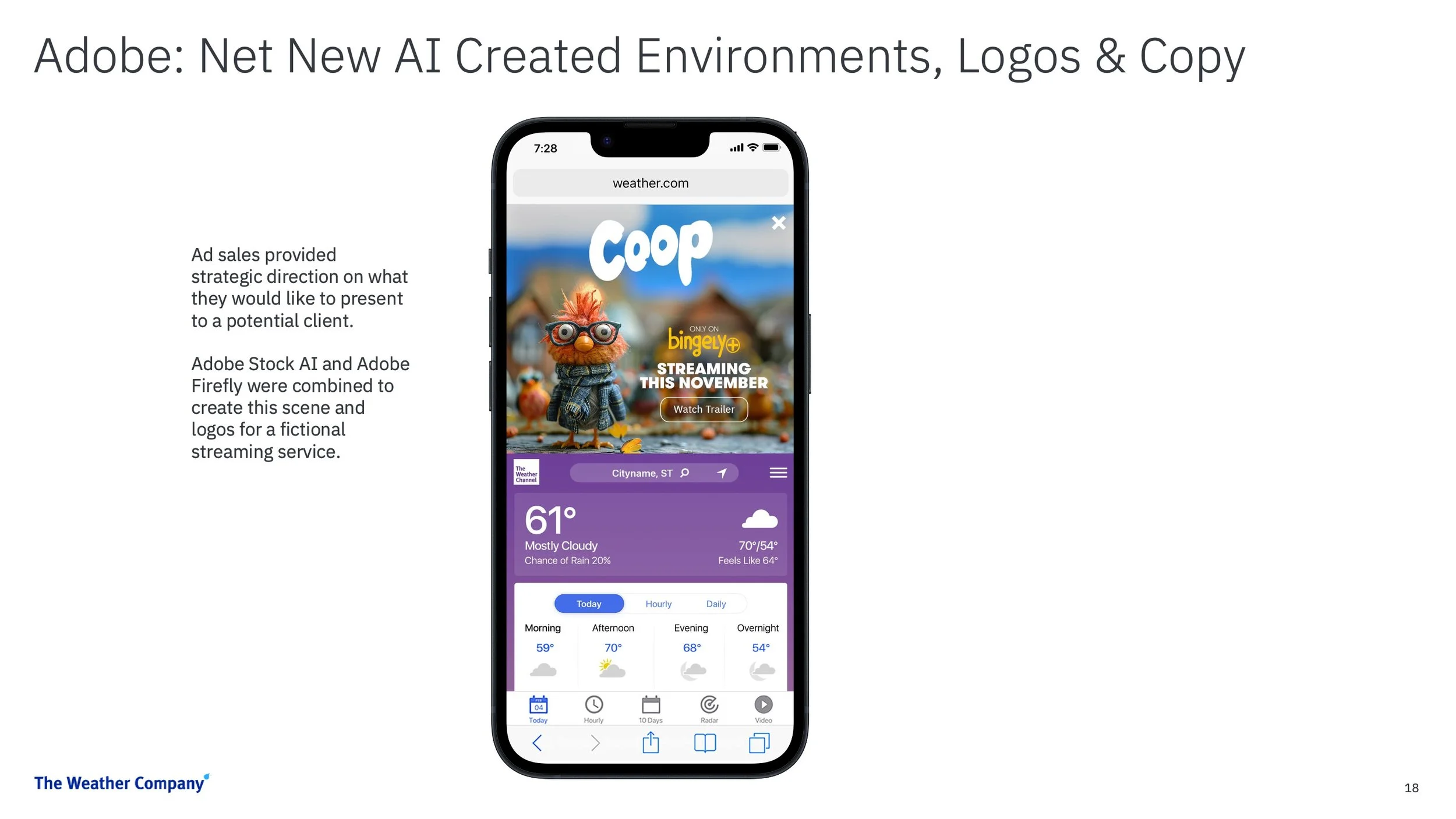

Exploring Net-New Creative Possibilities

The following examples show how we’re using Gen AI to create entirely new graphic design experiences, moving beyond adaptation into original creative territory.

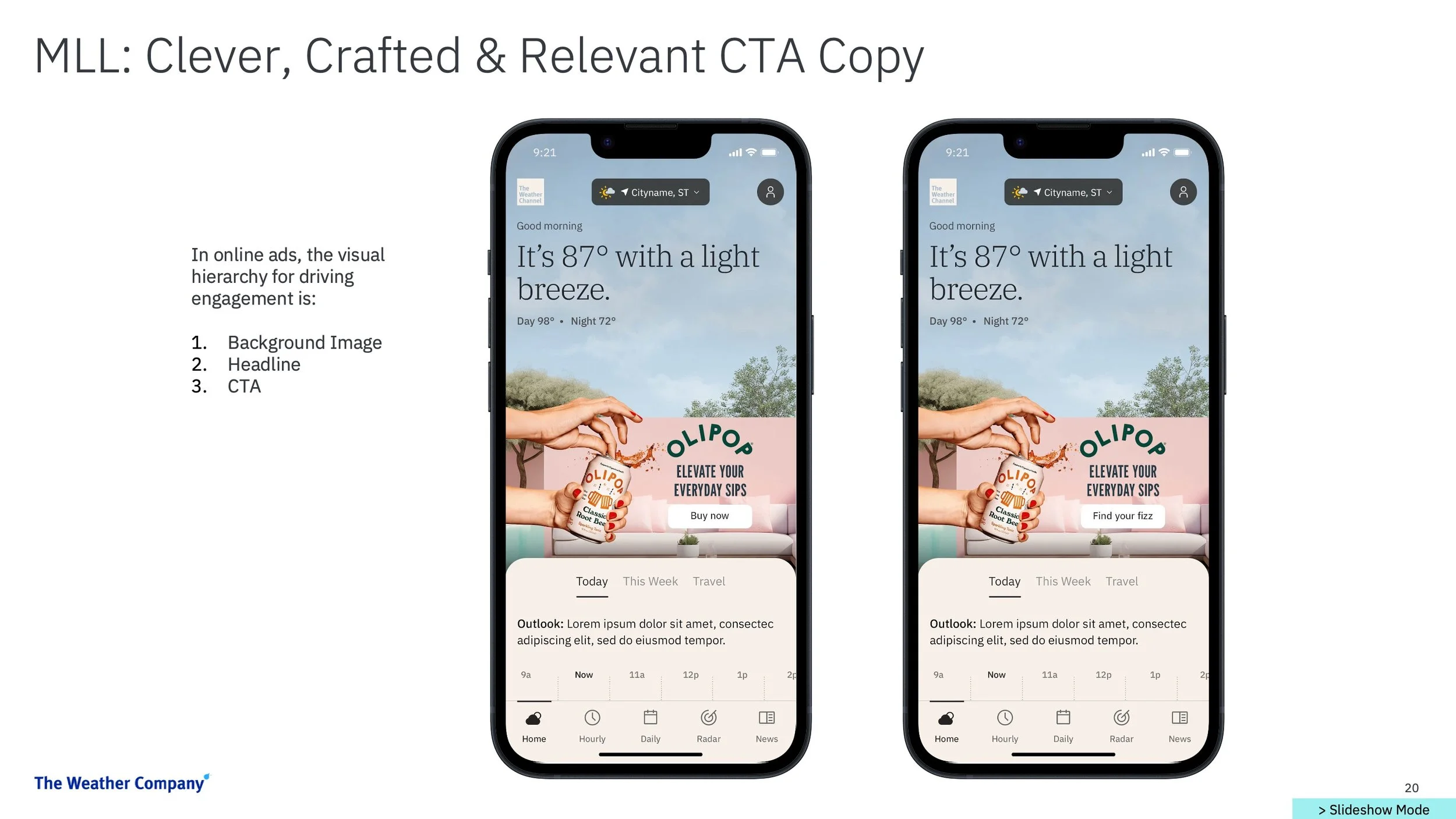

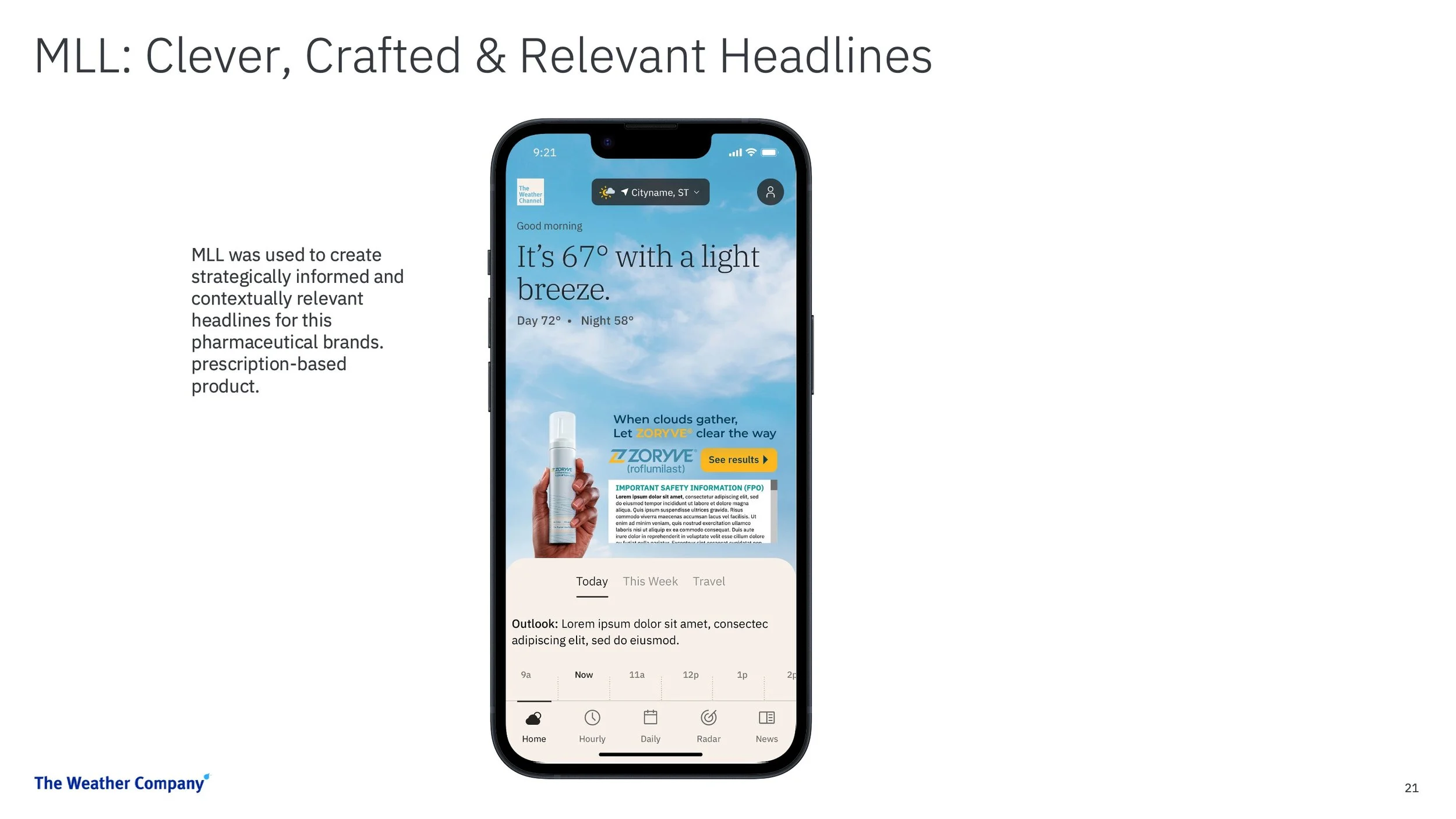

Introducing Mercury Language Lab (MLL)

MLL is The Weather Company’s proprietary, OpenAI-powered chat experience. It is built to process sensitive client and company information safely within a secure, siloed environment that does not feed data back into public model training.

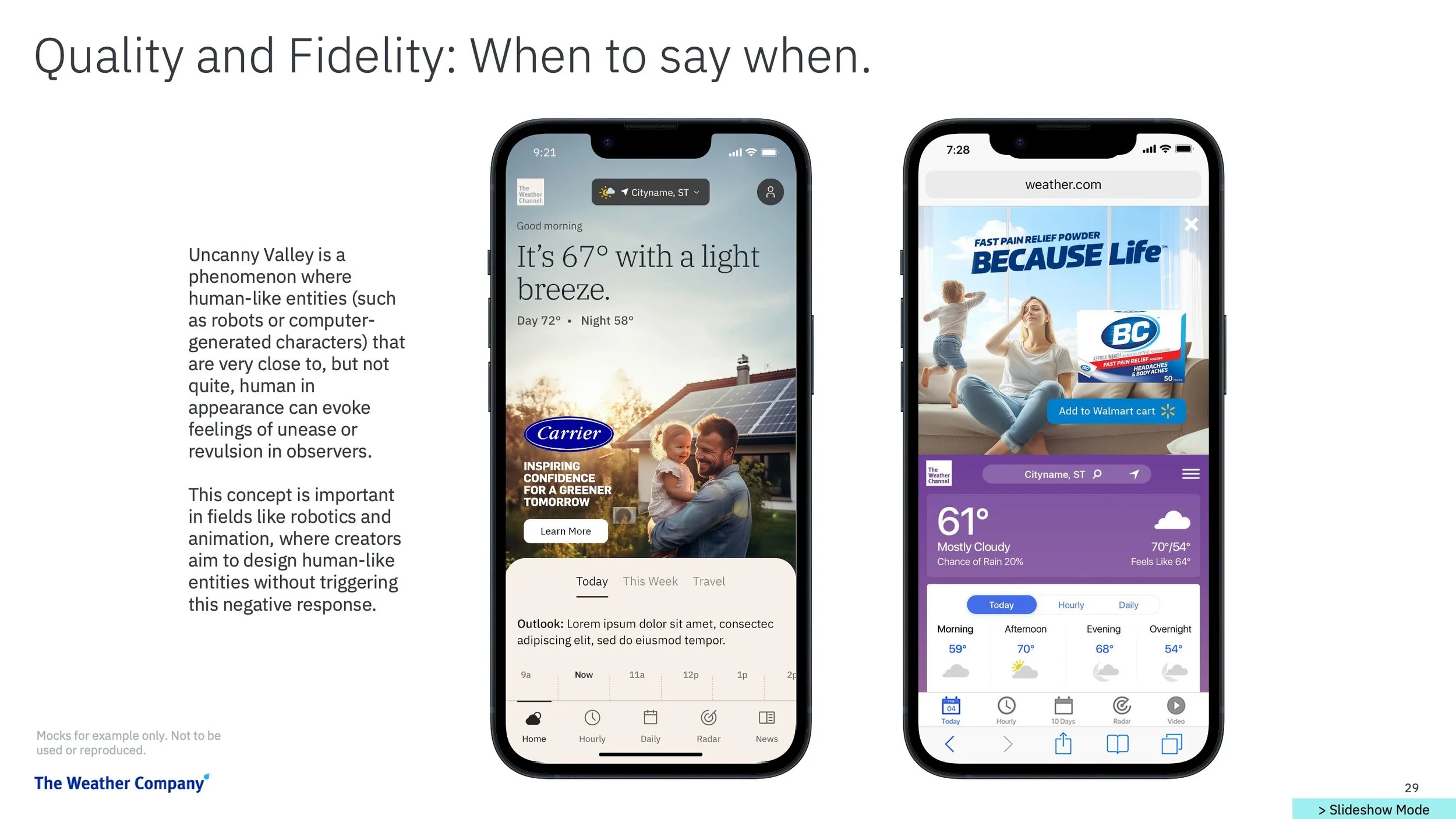

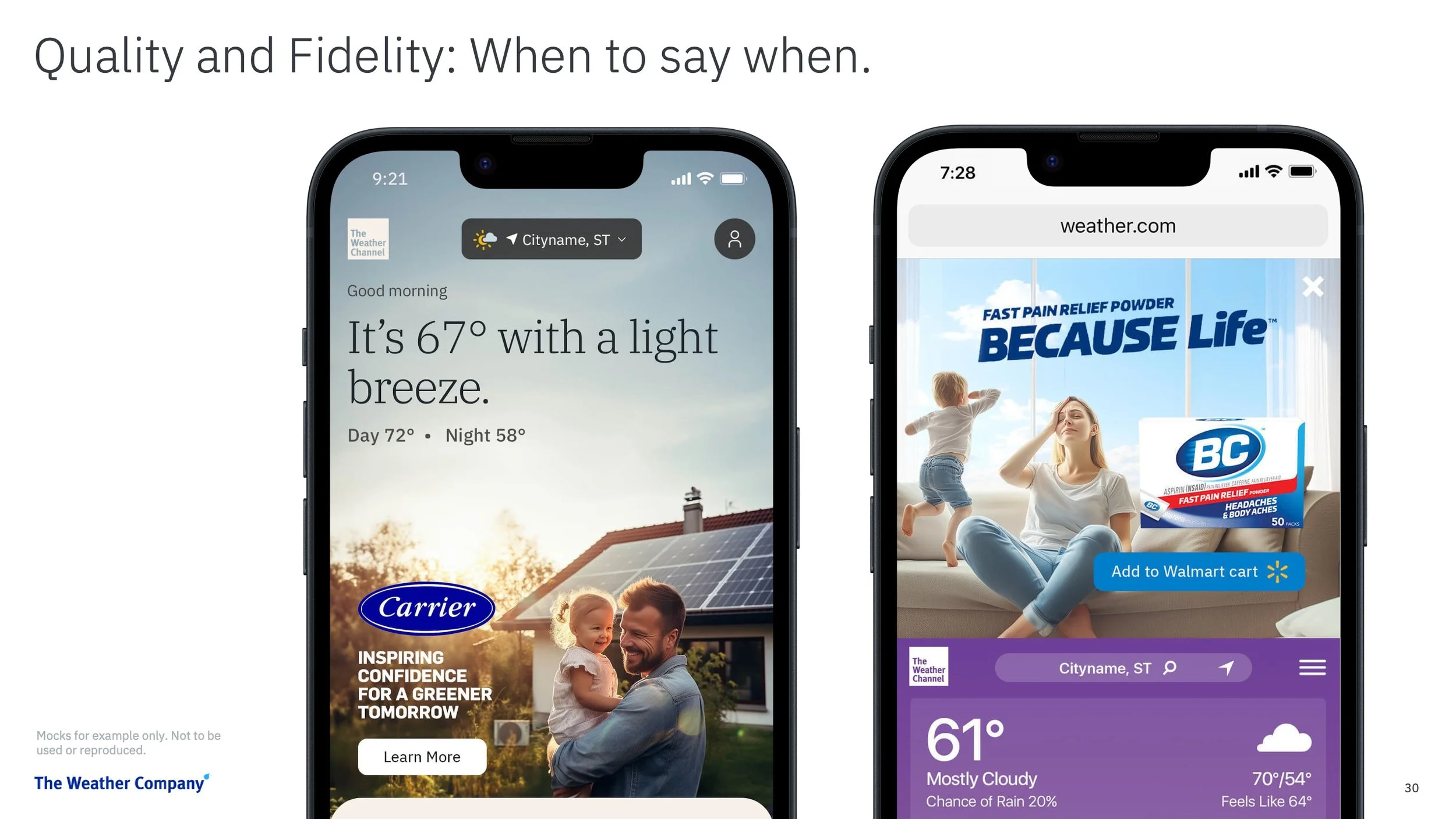

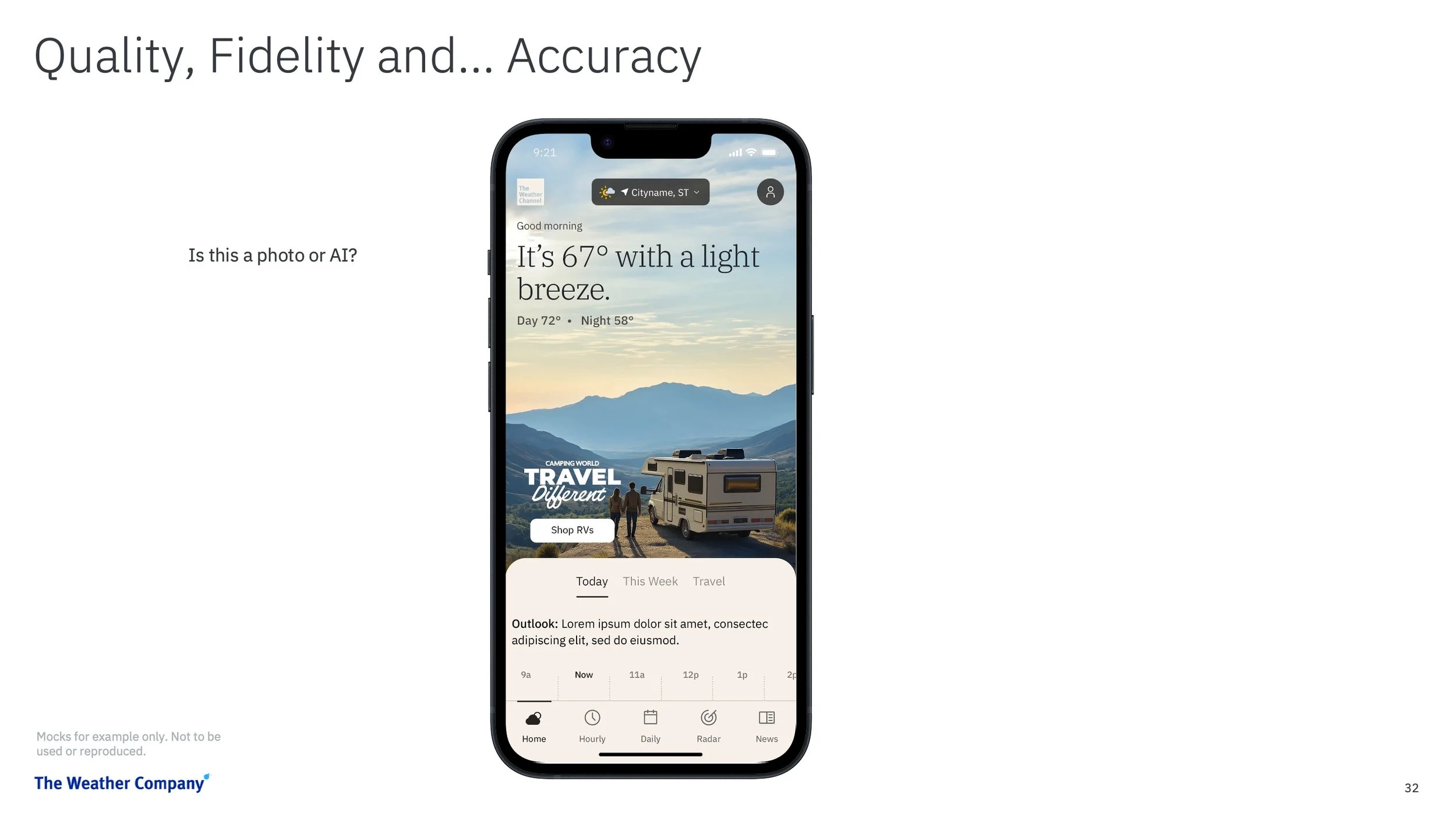

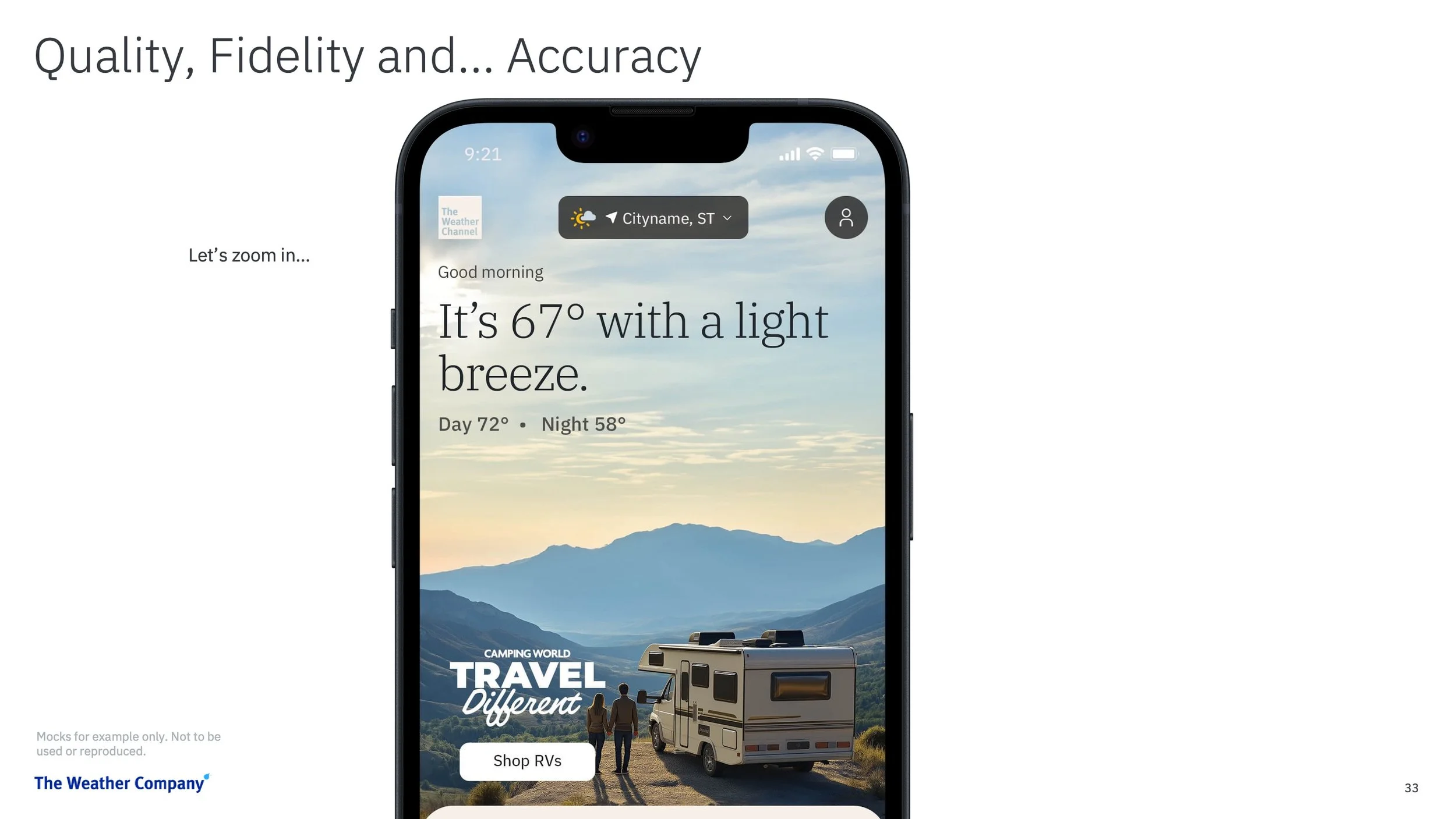

The Challenge of Spotting Subtle Inaccuracies in Gen AI Outputs

The image below looks high-fidelity at first glance, but the RV design contains key inaccuracies. Obvious anatomical errors in human figures (such as six fingers or three legs) are easy to catch. Domain-specific mistakes are much harder to detect without subject-matter expertise.

In this case:

The RV incorrectly features two rooftop HVAC units instead of one

The entry door and awning are on the left (driver’s) side, a layout suited only to left-hand traffic (LHT) countries, where the curb is on the left

This underscores the need for human review, especially when visual accuracy is essential to brand integrity and audience trust.

End-to-End Content Creation with Gen AI Tools

The video examples below were created entirely by Brian’s design team using Gen AI platforms for motion visuals, voiceover, background music, and sound design. Tools include Mercury Language Lab, Midjourney, Runway ML, Luma AI, Adobe Generative Fill, ElevenLabs, and Epidemic Sound.

Platforms: All content delivery and consumption platforms

Features: Graphics, Video, Motion, Animation, Music, Voice, SFX